OpenClaw and the Rise of the 'Real' AI Assistant

An honest look at OpenClaw, the open-source AI personal assistant generating real excitement. What it does, what I learned running it, and why it matters for the future of enterprise AI.

Like a lot of people, I've been closely following the recent buzz around OpenClaw, an open-source AI personal assistant that's generating real excitement in the developer community.

And honestly, it makes sense.

Most of us have been promised "smart assistants" for over a decade. We were told Siri, Alexa, and Google Assistant would manage our lives. Instead, we got tools that can set timers, mishear us, and occasionally turn on the wrong lights.

So when something like OpenClaw shows up promising a truly capable personal AI agent, people pay attention.

Over the past few weeks, I've been running OpenClaw at home. My honest assessment: it's very early-stage software, not ready for most people to use safely. But the potential here is enormous.

What Is OpenClaw, Really?

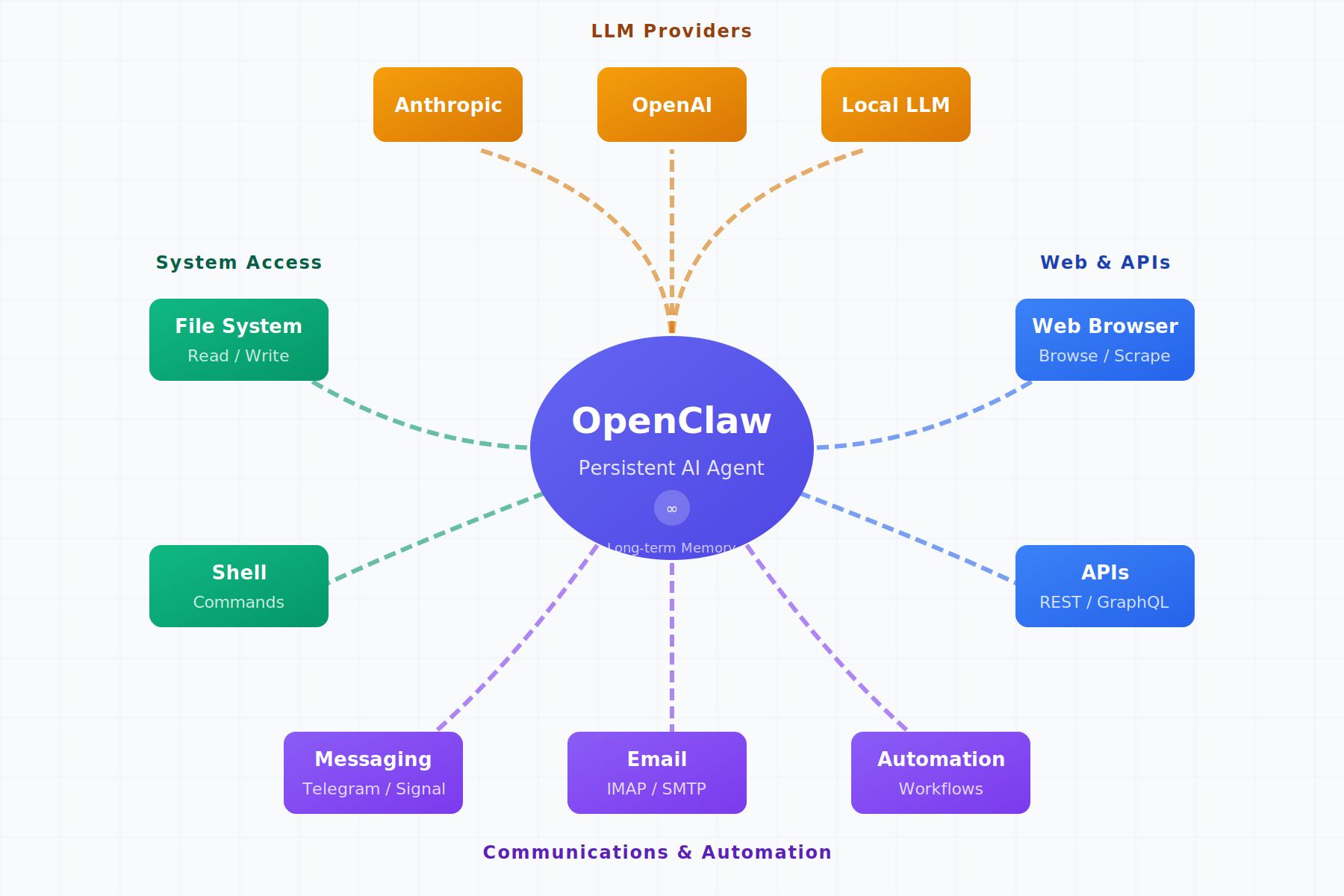

At its core, OpenClaw is a persistent AI agent that runs as a background service on your machine. Once installed, it operates continuously, maintaining long-term memory and context. It can connect to large language models from providers like Anthropic, OpenAI, and Google, as well as locally hosted models.

But this isn't just "ChatGPT with a wrapper."

OpenClaw can access your file system, execute shell commands, browse the web, call APIs, read your email, and trigger automation tools. It doesn't just talk. It sees. It remembers. And it acts.

OpenClaw sits at the center, connecting to LLM providers, your file system, shell, browser, APIs, and messaging platforms.

The key differentiator is persistence. Your sessions don't disappear when you close a window. The agent keeps context over time, learns your environment, understands patterns, and builds continuity.

That's the difference between a chatbot and a digital coworker.
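Persistence, at its simplest, means the agent writes context somewhere that outlives the process. The sketch below is not OpenClaw's code. `LocalMemoryStore` and its JSON-file format are hypothetical, chosen only to show a fresh instance, standing in for the agent after a restart, picking up what an earlier one recorded.

```python
import json
import tempfile
from pathlib import Path


class LocalMemoryStore:
    """Hypothetical append-only memory that survives process restarts.

    A sketch of the persistence idea only, not OpenClaw's implementation.
    """

    def __init__(self, path: Path) -> None:
        self.path = path
        # Reload whatever earlier sessions wrote, so context is not lost on restart.
        self.entries = json.loads(path.read_text()) if path.exists() else []

    def remember(self, role: str, text: str) -> None:
        self.entries.append({"role": role, "text": text})
        # Write through to disk after every update.
        self.path.write_text(json.dumps(self.entries))

    def recent(self, n: int = 5) -> list[dict]:
        """Return the last n entries, oldest first."""
        return self.entries[-n:]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        store_path = Path(tmp) / "memory.json"
        LocalMemoryStore(store_path).remember("user", "My projects live in ~/projects")
        # A brand-new instance (think: after a restart) still sees the entry.
        print(LocalMemoryStore(store_path).recent())
        # prints [{'role': 'user', 'text': 'My projects live in ~/projects'}]
```

A real agent would also need to summarize or search its memory rather than replay everything, but the core property is the same: state lives on disk, not in the session.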

```yaml
# Example OpenClaw configuration (simplified)
agent:
  persistence: true
  memory_store: local

providers:
  - name: anthropic
    model: claude-sonnet-4-20250514
    api_key: ${ANTHROPIC_API_KEY}

integrations:
  filesystem:
    enabled: true
    sandboxed: true
    allowed_paths:
      - ~/projects
      - ~/documents
  messaging:
    telegram:
      enabled: true
      webhook_url: ${TELEGRAM_WEBHOOK}
```
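The `sandboxed: true` and `allowed_paths` settings in the example configuration only help if the check behind them is strict. Here is one way such a check could work, assuming the list is treated as a set of allowed root directories. `is_path_allowed` is hypothetical, and this is not how OpenClaw is known to enforce the setting. It needs Python 3.9+ for `Path.is_relative_to`.

```python
from pathlib import Path

# Hypothetical: mirrors the allowed_paths entries in the example config.
ALLOWED_PATHS = ["~/projects", "~/documents"]


def is_path_allowed(candidate: str, allowed: list[str] = ALLOWED_PATHS) -> bool:
    """Return True only if candidate resolves to a location inside an allowed root.

    resolve() normalizes '..' segments and follows symlinks, so a path like
    '~/projects/../.ssh/id_rsa' is judged by where it really points.
    """
    target = Path(candidate).expanduser().resolve()
    for root in allowed:
        root_path = Path(root).expanduser().resolve()
        # is_relative_to compares path components and is True when target == root_path.
        if target.is_relative_to(root_path):
            return True
    return False
```

Comparing resolved paths component by component, rather than checking whether the string starts with `~/projects`, also keeps a sibling folder like `~/projects-old` out of reach.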

What I Actually Tried

Over two weeks, I tested OpenClaw on practical workflows. I had it monitor a project folder and summarize changes daily. I used it to draft commit messages based on diffs. I tested the Telegram integration for remote file lookups while away from my desk.

The results were inconsistent but occasionally impressive. When it worked, it felt like having a junior developer who never sleeps. When it didn't, it reminded me why we're still in the early innings of this technology.
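The daily folder summary depends on knowing what changed since the last run. A minimal sketch of that step, assuming a content-hash snapshot is kept between runs; `snapshot` and `diff_snapshots` are hypothetical helpers, not OpenClaw's code. The resulting lists are the kind of input you would hand to the model to summarize.

```python
import hashlib
from pathlib import Path


def snapshot(folder: Path) -> dict[str, str]:
    """Map each file's path (relative to folder) to a SHA-256 digest of its contents."""
    result = {}
    for path in sorted(folder.rglob("*")):
        if path.is_file():
            digest = hashlib.sha256(path.read_bytes()).hexdigest()
            result[str(path.relative_to(folder))] = digest
    return result


def diff_snapshots(old: dict[str, str], new: dict[str, str]) -> dict[str, list[str]]:
    """Classify files as added, removed, or modified between two snapshots."""
    return {
        "added": sorted(new.keys() - old.keys()),
        "removed": sorted(old.keys() - new.keys()),
        "modified": sorted(k for k in old.keys() & new.keys() if old[k] != new[k]),
    }
```

Hashing contents instead of comparing modification times avoids reporting files that were touched but not actually changed, at the cost of reading every file on each run.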

From Assistant to Autonomous Agent

Where things get even more interesting (and more concerning) is OpenClaw's ability to integrate with messaging platforms like WhatsApp, Telegram, and Signal. With the right configuration, you can message your assistant remotely and trigger actions.

Imagine this: you're away from home, you send a message, and your AI agent turns on lights, checks files, runs scripts, or initiates workflows.

You're no longer "using" software. You're delegating to it.

At that point, OpenClaw stops being a tool and starts becoming an autonomous agent. That's incredibly powerful. It's also dangerous.
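One way to narrow that danger is to put a gate between the chat app and the agent. A minimal sketch, assuming messages arrive as (sender ID, text) pairs; the sender ID format, `TRUSTED_SENDERS`, and the command table are all hypothetical and not taken from OpenClaw's Telegram, WhatsApp, or Signal integrations.

```python
from typing import Callable

# Hypothetical: IDs of the only accounts allowed to issue commands.
TRUSTED_SENDERS = {"telegram:123456789"}

# Hypothetical command table: each remote command maps to one narrow handler,
# instead of message text being passed straight to a shell.
COMMANDS: dict[str, Callable[[str], str]] = {
    "status": lambda arg: "agent is running",
    "find": lambda arg: f"searching allowed folders for {arg!r}",
}


def handle_message(sender: str, text: str) -> str:
    """Ignore unknown senders, reject unknown commands, dispatch the rest."""
    if sender not in TRUSTED_SENDERS:
        return "ignored: unknown sender"
    name, _, arg = text.strip().partition(" ")
    handler = COMMANDS.get(name.lower())
    if handler is None:
        return f"rejected: unknown command {name!r}"
    return handler(arg)
```

A fixed menu like this trades flexibility for predictability. An LLM-driven agent interprets free text instead, which is exactly what makes it both more useful and more risky.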

Power Comes With Real Risk

Giving software deep access to your system, data, and communication channels is not a trivial decision. OpenClaw can read sensitive files, execute system commands, access private communications, and trigger automated actions. If something goes wrong (through a bug, a misinterpreted instruction, or a malicious prompt hidden in an email or web page the agent reads), the consequences could be serious: deleted files, leaked data, or messages sent in your name.

Right now, major questions remain unanswered around security boundaries, permission models, auditability, data governance, abuse prevention, and failure modes.

Because of that, I would strongly caution against running this on your primary personal machine. If you want to experiment, do it in a sandbox. Use a separate device. Keep personal and financial data out of reach. Understand exactly what you're enabling.

This is not plug-and-play consumer software yet. This is experimental infrastructure. Treat it that way.
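Two of those open questions, permission models and auditability, are easy to illustrate even if they are hard to get right. A minimal sketch, assuming every shell command the agent wants to run passes through a single function first; `ALLOWED_COMMANDS`, `check_and_log`, and the JSON Lines log format are hypothetical.

```python
import json
import shlex
import time

# Hypothetical policy: executables the agent may run without asking.
ALLOWED_COMMANDS = {"ls", "cat", "git"}


def check_and_log(command: str, log_path: str) -> bool:
    """Decide whether a shell command is permitted and record the decision.

    Every attempt is appended to a JSON Lines audit log, allowed or not,
    so there is a trail to review after something goes wrong.
    """
    try:
        argv = shlex.split(command)
    except ValueError:
        argv = []  # Unparseable input (e.g. unbalanced quotes) is denied.
    allowed = bool(argv) and argv[0] in ALLOWED_COMMANDS
    record = {"ts": time.time(), "command": command, "allowed": allowed}
    with open(log_path, "a", encoding="utf-8") as log:
        log.write(json.dumps(record) + "\n")
    return allowed
```

An allowlist of executables is only a first layer: `cat ~/.ssh/id_rsa` would still pass. In practice it would be combined with path restrictions and with running the approved argument list via `subprocess.run(argv)` rather than through a shell.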

Why This Still Matters

With all that caution, you might wonder why I'm excited about this at all.

Because OpenClaw shows us what's coming.

For the first time, we're seeing practical demonstrations of what happens when you combine large language models with long-term memory, giving the agent continuity across sessions and the ability to learn your patterns. Add tool access, and it can actually do things in the real world, not just talk about them. Layer in automation and messaging interfaces, and suddenly you can delegate tasks remotely. Wrap it all in a persistent identity, and you have something that feels less like software and more like a collaborator.

| Capability | Traditional Assistants | Agent-Based Assistants |
|---|---|---|
| Persistence | Session-based; context is lost | Continuous; maintains history |
| Memory | Minimal; starts fresh each time | Long-term context retention |
| Actions | Predefined skills only | Arbitrary tool access |
| Integration | Walled-garden ecosystems | Open APIs and local tools |
| Autonomy | Reactive to commands | Can act proactively |

That combination creates something fundamentally new. Not a chatbot. Not a search engine. Not a voice assistant. A true digital agent.

Once people experience that, there's no going back. The question is no longer "Will this exist?" It's "Who will build it responsibly?"

A Path Forward: Enterprise-Grade Agents

I've been a big fan of Claude Code and have enjoyed watching its evolution, especially with the emergence of Claude Cowork.

What excites me is the possibility that companies like Anthropic can take the raw ideas being explored in projects like OpenClaw and bring them into secure, governed, enterprise-grade environments. Imagine strong permission models with transparent audit logs. Sandboxed execution with human-in-the-loop controls. Compliance by design with safety-first defaults.

That's how this becomes trustworthy. That's how it becomes scalable. That's how it becomes something people can rely on, not fear.
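Human-in-the-loop control, in its simplest form, is a pause before anything consequential. A minimal sketch, assuming some earlier step has already tagged each proposed action as risky or not; `Action`, `execute_with_approval`, and the `risky` flag are hypothetical and not drawn from Claude Code, Claude Cowork, or any other product.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Action:
    description: str
    risky: bool  # hypothetical flag: e.g. deletes data, sends messages, spends money


def execute_with_approval(
    action: Action,
    run: Callable[[Action], str],
    approve: Callable[[Action], bool],
) -> str:
    """Run low-risk actions directly; block risky ones unless a human approves."""
    if action.risky and not approve(action):
        return f"blocked: {action.description}"
    return run(action)
```

In a terminal, `approve` could be as simple as `lambda a: input(f"Allow {a.description}? [y/N] ").strip().lower() == "y"`; in an enterprise setting it might route the request to a reviewer and record the decision in an audit log.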

Final Thoughts

OpenClaw today is rough, unstable, risky, and unfinished.

But it's also a preview.

It's a glimpse into a future where AI doesn't just answer questions. It collaborates. It remembers. It executes. It operates alongside us. We are moving from "AI as a tool" to "AI as a partner."

That transition will be messy. It will create new risks. It will force new governance models. It will demand better engineering discipline.

But it's coming. And projects like OpenClaw are showing us what that future might look like, for better and for worse.

If you're curious, explore carefully. If you're building, build responsibly. If you're leading, start thinking now about what this means for your organization.

The first generation of real AI assistants is here. They're clumsy. They're dangerous. They're incomplete. And they're going to change everything.
