The Rise of MCP Servers: Why Every Developer Will Have a Personal AI Toolchain

From AWS to GitHub to Podman, MCP servers are quietly becoming the new plug-in ecosystem for developers—and it’s changing how we work.

If you’ve been paying attention to the Model Context Protocol world lately, you’ve probably noticed the same trend I have: suddenly every vendor, every open-source project, every platform team is shipping an MCP server.

This isn’t a coincidence. It’s a shift. And just like Docker in 2013 or GitHub Actions in 2019, we’re early enough to feel the energy but late enough to know this is not a fad.

🚀 A New Layer in the Dev Stack

The idea behind MCP is simple but game-changing: a standard way for AI agents and IDE-integrated assistants to talk to tools, services, APIs, docs, CLIs, and infra without writing glue code, wrapping SDKs, or duct-taping local scripts together.

Instead of your editor “knowing about AWS,” you run an MCP server that exposes AWS actions. Instead of your AI agent hallucinating how to fix a Dockerfile, it literally asks the Docker MCP server.

That means:

- No more embedding secrets or tokens inside prompts

- No more “copy this JSON from the console into ChatGPT” workflows

- No more AI tools guessing—they execute

AI stops being an autocomplete engine and starts behaving like a teammate that can run code, read docs, take actions, and reason with context.
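To make "asks the server" a bit more concrete: MCP messages are JSON-RPC 2.0, and a client discovers and invokes tools through the `tools/list` and `tools/call` methods. Here's a minimal Python sketch of that request/response shape. It is not the official SDK, and it skips the initialization handshake, transports, and input schemas. The `lint_dockerfile` tool and its single rule are made up for illustration.

```python
import json
from typing import Callable

# Hypothetical in-memory registry: tool name -> (description, handler).
TOOLS: dict[str, tuple[str, Callable[..., str]]] = {}


def tool(name: str, description: str):
    """Register a plain function as a callable tool."""
    def decorator(fn: Callable[..., str]) -> Callable[..., str]:
        TOOLS[name] = (description, fn)
        return fn
    return decorator


@tool("lint_dockerfile", "Toy check: flag base images without a tag.")
def lint_dockerfile(text: str) -> str:
    # Illustrative rule only, not a real linter.
    for line in text.splitlines():
        stripped = line.strip()
        if stripped.upper().startswith("FROM ") and ":" not in stripped:
            return f"unpinned base image: {stripped}"
    return "no issues found"


def _reply(req_id, key: str, value: dict) -> str:
    """Wrap a result or error in a JSON-RPC 2.0 response envelope."""
    return json.dumps({"jsonrpc": "2.0", "id": req_id, key: value})


def handle(raw: str) -> str:
    """Answer one JSON-RPC request string with one JSON-RPC response string."""
    req = json.loads(raw)
    req_id, method = req.get("id"), req.get("method")
    params = req.get("params") or {}
    if method == "tools/list":
        tools = [{"name": n, "description": d} for n, (d, _) in TOOLS.items()]
        return _reply(req_id, "result", {"tools": tools})
    if method == "tools/call":
        entry = TOOLS.get(params.get("name"))
        if entry is None:
            return _reply(req_id, "error", {"code": -32602, "message": "Unknown tool"})
        text = entry[1](**params.get("arguments", {}))
        return _reply(req_id, "result", {"content": [{"type": "text", "text": text}]})
    return _reply(req_id, "error", {"code": -32601, "message": "Method not found"})


request = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "tools/call",
    "params": {"name": "lint_dockerfile", "arguments": {"text": "FROM ubuntu\nRUN true"}},
}
print(handle(json.dumps(request)))
```

The point is the shape: the assistant sends a structured request and gets a structured answer back from the tool instead of guessing.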

🧠 My Local MCP Stack

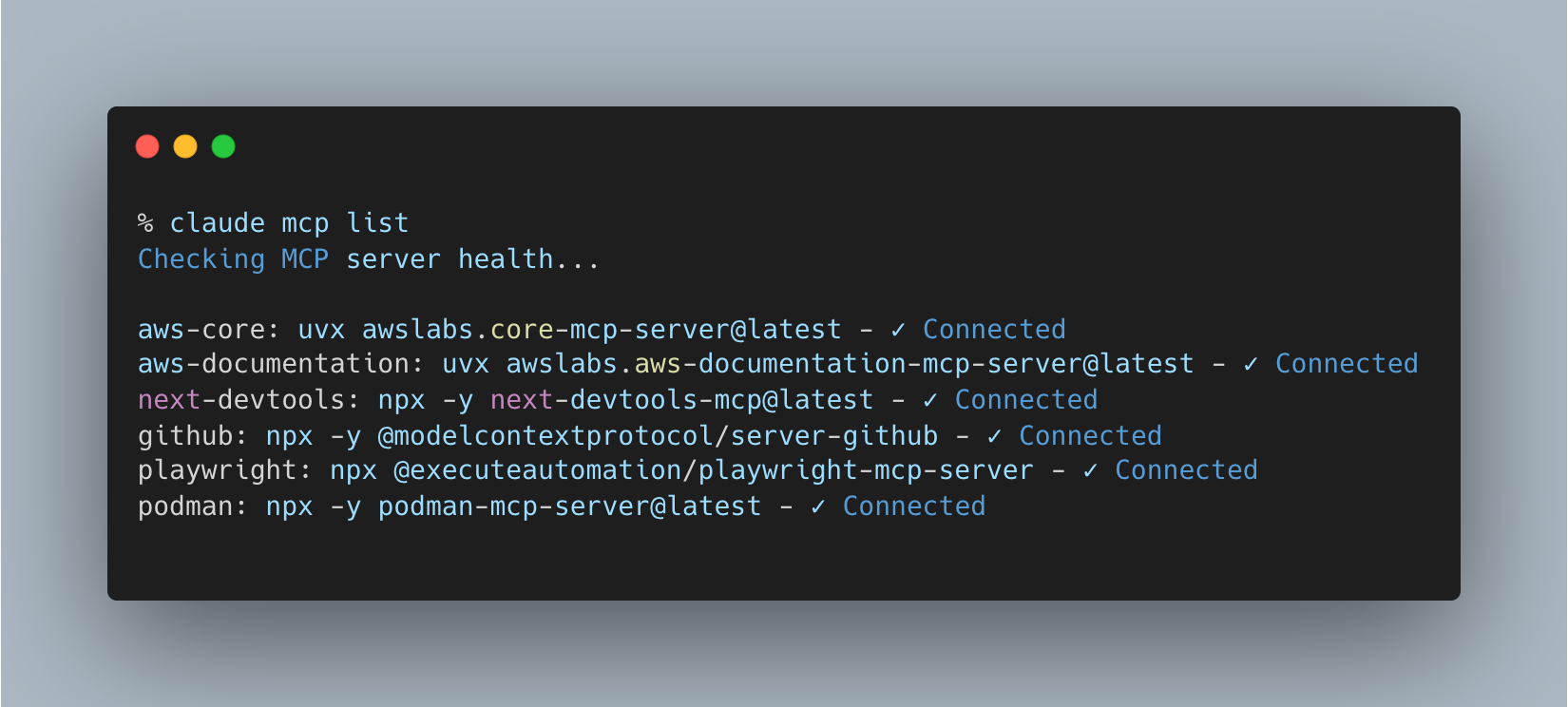

Here’s what’s currently running in my personal dev environment:

That’s not a toy setup. That’s my daily workflow.

- aws-core: gives my AI assistant real-time insight into AWS services, infra, config, and deployments

- aws-documentation: eliminates “what’s the API for X again?” Google searches

- github: lets me query PRs, issues, branches, test runs

- next-devtools: integrates with local Next.js projects so I can refactor or debug with conversational commands

- podman: gives container-level visibility and control

- playwright: means “write me a test” turns into an actual file, not a suggestion

And all of it runs locally, not through a vendor cloud.
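For a sense of what "runs locally" looks like in practice: several MCP clients read a JSON file with a top-level `mcpServers` object, where each entry names a local command to launch. The sketch below builds that kind of structure for the stack described above. The server names are mine, but every command here is a hypothetical placeholder rather than the real launch command, and the exact file name and schema depend on your client.

```python
import json

# Server names match the stack described in this post. The commands are
# hypothetical placeholders, NOT the real install/launch commands.
SERVERS: dict[str, list[str]] = {
    "aws-core": ["example-aws-core-server"],
    "aws-documentation": ["example-aws-docs-server"],
    "github": ["example-github-server"],
    "next-devtools": ["example-next-devtools-server"],
    "podman": ["example-podman-server"],
    "playwright": ["example-playwright-server"],
}


def build_client_config(servers: dict[str, list[str]]) -> dict:
    """Build an mcpServers-style config: one locally launched process per server."""
    return {
        "mcpServers": {
            name: {"command": argv[0], "args": argv[1:]}
            for name, argv in servers.items()
        }
    }


if __name__ == "__main__":
    print(json.dumps(build_client_config(SERVERS), indent=2))
```

Each entry is just a process the client starts on your machine, which is why the whole stack can live in a dotfiles repo.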

That’s a very different world from the one we lived in 18 months ago, where AI tools were mostly text predictors dressed up as copilots.

🔄 From “Chat About Code” to “Operate the System”

Before MCP, AI tools were like a junior dev who could explain things well but wasn’t allowed to log into production or touch a shell. Lots of talk, limited action.

Now? They’re becoming operators.

- Ask “what Lambdas were deployed last week?” → it runs an AWS query

- Ask “write an integration test for this API route” → it generates, validates, runs, and fixes

- Ask “clean up dangling containers and volumes” → Podman server handles it
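As a rough illustration of the last item, here's how a tool handler might map that request onto real Podman commands. This is a sketch under my own assumptions, not how any particular Podman MCP server is implemented. Note that `podman container prune` removes all stopped containers and `podman volume prune` removes unused volumes, so the function defaults to a dry run that only reports what it would execute.

```python
import shlex
import subprocess

# Real Podman subcommands; --force skips the interactive confirmation prompt.
CLEANUP_COMMANDS: list[list[str]] = [
    ["podman", "container", "prune", "--force"],
    ["podman", "volume", "prune", "--force"],
]


def cleanup_containers(dry_run: bool = True) -> list[str]:
    """Return the planned commands; run them only when dry_run is False."""
    planned = [shlex.join(cmd) for cmd in CLEANUP_COMMANDS]
    if not dry_run:
        for cmd in CLEANUP_COMMANDS:
            # check=True raises CalledProcessError if Podman exits non-zero.
            subprocess.run(cmd, check=True)
    return planned


if __name__ == "__main__":
    for line in cleanup_containers():
        print("would run:", line)
```

Defaulting to a dry run is a deliberate choice: the agent can show you the plan, and you decide when it gets to pull the trigger.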

The line between developer and developer-plus-agent is getting blurry.

The dream isn’t “AI replacing engineers,” it’s engineers spending less time clicking and more time creating.

🧩 The Implications

✅ Every SaaS tool will ship an MCP server

✅ Every platform team will build internal MCP endpoints

✅ Every IDE will become “AI-native” by speaking MCP

✅ Every dev will eventually have a personal AI runtime environment—just like we all ended up with Homebrew, Git, Docker, and a password manager

The real unlock is not that AI can read your code but that it can act on your environment with the same privileges you have, with guardrails you define.

This is the shift from “AI as a chat tab” to “AI as part of the operating system.”

🔐 A Quick Reality Check: Security Isn’t Fully Solved Yet

Of course, anytime a new layer gets added to the developer stack—especially one that can execute real actions against cloud resources, source control, and production systems—the security folks start sweating. And right now, they’re not wrong.

MCP servers are still early in how they handle:

- Auth and permission scoping (most are running with full trust, not least privilege)

- Secret management and token handling (too many .env files, not enough vaults)

- Auditability and action logging (great for devs, uncomfortable for compliance teams)

- Cross-tool privilege escalation (an MCP server that can call another MCP server has real blast-radius potential)

We’re in the “ssh into prod with a shared key” phase of the curve, not the “Zero Trust, signed identity, fine-grained RBAC” phase.

But that will come fast, because the productivity upside is too massive, and nobody wants to be the company that blocks developers from using the tooling every other team already has.

Expect to see:

- Enterprise-grade MCP gateways and policy engines

- Vault-backed credential injection instead of static tokens

- Explicit capability whitelisting (“can read PR metadata but can’t merge branches”)

- Per-user audit logs and just-in-time permissioning

- Cloud providers baking MCP into IAM instead of treating it as an add-on
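Two of those items, capability allowlisting and per-user audit logs, are easy to picture in code. Here's a minimal sketch of a policy gate that sits in front of tool calls. Everything in it is an assumption for illustration: the `PolicyGate` class, the tool names, and the log format are hypothetical, not part of MCP or any existing gateway.

```python
import time
from dataclasses import dataclass, field


@dataclass
class PolicyGate:
    """Allow only listed (server, tool) pairs and record every decision."""
    allowed: set[tuple[str, str]]
    audit_log: list[dict] = field(default_factory=list)

    def authorize(self, user: str, server: str, tool: str) -> bool:
        decision = (server, tool) in self.allowed
        # Log denials as well as approvals so reviewers see attempted actions.
        self.audit_log.append({
            "ts": time.time(),
            "user": user,
            "server": server,
            "tool": tool,
            "allowed": decision,
        })
        return decision


# Hypothetical tool names: "can read PR metadata but can't merge branches".
gate = PolicyGate(allowed={("github", "get_pull_request")})

can_read = gate.authorize("alice", "github", "get_pull_request")
can_merge = gate.authorize("alice", "github", "merge_pull_request")
print(can_read, can_merge, len(gate.audit_log))
```

Anything not explicitly listed is denied, which is the least-privilege default most MCP setups are missing today.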

In other words, security will catch up because the demand is unavoidable. Once teams see what it feels like to have an AI agent that can actually query infrastructure, fix pipelines, refactor code, and open PRs, nobody will want to go back.

We’re watching the next interface layer of software take shape in real time, and yes, it’s messy, but it’s also the most exciting shift in developer tooling since GitHub, Docker, and LSPs.

And just like those, it’s going to feel obvious in hindsight.

🔮 So What Happens Next?

- Companies will publish MCP packages the same way they publish npm packages

- Devs will start curating “MCP stacks” the same way we build dotfiles or Neovim configs

- AI tools will hallucinate far less because they’ll have real context, real APIs, real execution

- The default answer to “Can AI do that yet?” will switch from “Not really” to “Yeah, install the server”

And just like Docker Compose rewired how teams collaborate on infra, MCP might end up being the thing that rewires how we collaborate with AI.

We’re not replacing developers. We’re finally giving them superpowers.
