I just ran a 20-billion-parameter LLM entirely offline, from my Mac at home

I successfully ran OpenAI's 20-billion-parameter open-weight model (gpt-oss-20b) locally on a Mac with an M4 Pro chip. It shows the potential of offline AI that can rival cloud-based models without internet dependency or usage costs.

I just ran a 20-billion-parameter LLM entirely offline, from my Mac at home. 🤯

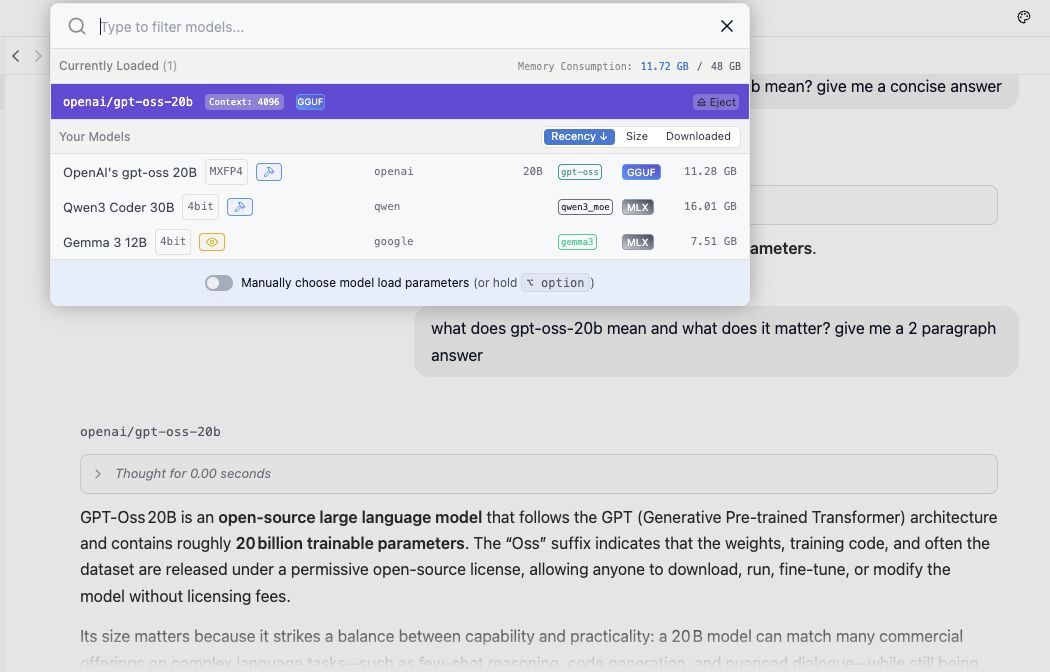

Figure 1: Local LLM configuration and setup

I've been experimenting with OpenAI's new open-weight model, gpt-oss-20b, on my Apple M4 Pro using LM Studio — and I'm genuinely impressed. This thing runs fast, respects your privacy (no data leaves your machine), and doesn't cost a cent beyond the initial download.

It holds its own against cloud-based giants like ChatGPT 5, especially for reasoning tasks, code generation, and even creative writing — all without an internet connection. In a world where AI often means "connect to someone else's infrastructure," this flips the script. You can build and test locally, no API calls or billing meters in sight.
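If you want to try the "build and test locally" part, here is a minimal sketch of talking to the model from Python. It assumes you have started LM Studio's local server, which exposes an OpenAI-compatible API. The address `http://localhost:1234/v1` and the model identifier `openai/gpt-oss-20b` are assumptions based on common defaults, so check what your own LM Studio instance shows. The helper names `build_chat_request` and `ask_local_model` are made up for this example.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is running at its usual default address.
BASE_URL = "http://localhost:1234/v1"
# Assumption: replace with the exact model identifier LM Studio lists for you.
MODEL_ID = "openai/gpt-oss-20b"


def build_chat_request(prompt, model=MODEL_ID, temperature=0.2):
    """Build an OpenAI-style chat completion payload (hypothetical helper)."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
    }


def ask_local_model(prompt, base_url=BASE_URL, timeout=120):
    """POST the prompt to the local server and return the reply text.

    Nothing leaves your machine: the request goes to localhost only.
    """
    body = json.dumps(build_chat_request(prompt)).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        reply = json.load(response)
    # OpenAI-compatible responses put the text under choices[0].message.content.
    return reply["choices"][0]["message"]["content"]


if __name__ == "__main__":
    # Print the payload only, so this runs even without the server started.
    print(json.dumps(build_chat_request("Summarize what an open-weight model is."), indent=2))
```

With the server running, `ask_local_model("Write a haiku about offline AI")` should return the model's reply as a string. I used only the standard library here so there is nothing extra to install.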

There are tradeoffs: no live web data, and you need hardware with enough memory to hold the model. Still, the benefits of local-first AI are growing fast. It feels like a glimpse into the future of decentralized intelligence.
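To put a rough number on the hardware side, here is a back-of-the-envelope sketch of how much memory the weights alone need. The bit widths below are illustrative assumptions, not measured figures for gpt-oss-20b, and the estimate ignores the context cache and runtime overhead, so treat it as a lower bound. The function name `weight_memory_gb` is made up for this example.

```python
def weight_memory_gb(num_params, bits_per_weight):
    """Rough memory for model weights only, in gigabytes (1 GB = 1e9 bytes)."""
    total_bytes = num_params * bits_per_weight / 8
    return total_bytes / 1e9


if __name__ == "__main__":
    params = 20e9  # 20 billion parameters, as in the post title
    # Assumed precisions, for comparison only.
    for label, bits in [("16-bit", 16), ("8-bit", 8), ("4-bit", 4)]:
        print(f"{label}: about {weight_memory_gb(params, bits):.0f} GB for weights")
```

The takeaway is that precision matters a lot: the same 20 billion parameters need roughly four times less memory at 4 bits per weight than at 16.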

💬 Are you running any open-weight models locally? I'd love to hear what's working for you and what you're building.
